With AI, Karen Read, Jonathan Richman, and special guests Alice and the caterpillar!

When I think of hallucinations, I think of the little men who lived on top of my grandmother’s dresser and hung out in the courtyard trees, keeping her company at the nursing home where she spent the last three years of her life. I think of my mother insisting that all sorts of crazy things had been happening in her bedroom when she was up all night shivering and burning with Covid fever. I think of walking down a leafy street in Berkeley on mushrooms as the tree canopy expanded and contracted in great waves of green.

I do not think of the time ChatGPT told me I was born and raised in New York City, graduated from Harvard, got my PhD at Brandeis, and wrote Rip-Roaring Reads for Reluctant Teen Readers, “a guide to engaging young people with literature.” That wasn’t a hallucination; it was simply untrue.

When I saw the NYT headline “AI Is Getting More Powerful, but Its Hallucinations Are Getting Worse,” my first thought was how insane it is that we, as a culture and economy, keep diving deeper into the AI waters. My second thought, especially when I read the sentence “It is not entirely clear why,” was, duh, enshittification. My third thought was to wonder why we call AI’s untruths hallucinations.

I’m using the word untruth to create the simplest juxtaposition. Truth has a lot of antonyms. If I asked you for its opposite, you would likely say lies. If I asked you for the opposite of true, it would probably be false. In these linguistic pairings, truth is what we know to be accurate, and its opposite is generally a version of inaccurate that implies purposefulness. We lie to cover up truths we don’t want to admit, for a myriad of reasons. False fronts hide the truth (that the building is really only one story high, that we don’t actually have bangs). On the other hand, my dissertation advisor blew our minds in a graduate seminar when she pointed out that fiction also stands in opposition to truth, which raises the ethical question of whether fictions are lies, a question that convulsed the 18th-century literati.[1]

Lie; false, falsehood, falsification; fiction: what these antitheses to truth have in common is that we create and control them—or at least we try to.

Hallucinations, by contrast, are natural phenomena. We may purposefully induce them by consuming hallucinogens, but we cannot control whether or when they show up, their content, or their existence in the world. It should be obvious, then, why hallucination was a useful term for the AI establishment: it naturalizes AI’s untruths and by extension humanizes AI.[2]

Except AI is neither human nor natural. AI hallucinations exist because people created AI—and then, like Frankenstein’s monster, it escaped their control (as some lies do too). Of course Frankenstein’s monster becomes heartbreakingly human, where AI fakes humanity. That is, the key difference between Frankenstein and an AI Agatha Christie doling out writing advice is that fiction ultimately became an ethical permission structure for untruth, a place where it’s reasonable for a patchwork of body parts to have its own thoughts and feelings. Whereas no matter how friendly, sympathetic, and helpful an AI chatbot may seem, it remains a passthrough for a conglomeration of other people’s words.

A lot of people seem OK with this. But words matter to me, as do the people who actually write words, as does the difference between truths and untruths. AI boosters claim that AI is here to help us, but that’s a patently false front for the truth of the matter: AI is here to make money for big tech. Which is why it’s damn the hallucinations, full speed ahead.

The Way We Live Now

Speaking of truths and untruths, let’s talk about Karen Read.

As the world beyond Massachusetts discovers The Great Trial of 2024, now become The Great Retrial of 2025, I am going to claim my OG status on the subject.[3] I read The Boston Globe every day, which means I read the very first story about a cop found dead in the snow on the side of a road outside a party at another cop’s house because his girlfriend had apparently hit him while backing up in a blizzard. Sound confusing? That’s only the beginning, as we learned during The Great Trial, which went Massachusetts viral thanks to a vicious blogger (who several months earlier had smeared somebody I know; I told you, I am seriously OG here); the resulting pink shirt movement;[4] and now The Great Retrial.

I’m trying hard not to follow The Great Retrial, but of course I have an opinion. Actually, I have two. My first opinion is that all these people, especially the cops, are terrible: there are so many cops in this story, so many varieties of bad cop, on every side, it is absolutely wild.[5] The only exceptions to the mass terribleness are the niece and nephew of John O’Keefe (the dead man by the side of the road), who lost both their parents, were taken in by their uncle, then lost their uncle, which is the true tragedy at the heart of this circus of a spectacle. My second opinion is that the prosecution made a huge mistake when they charged Read with second-degree murder. If they had just charged her with manslaughter, this would have been weird, sad, and judicially simple.

OK, fine, I do have a third opinion, which is that she probably hit him by mistake and that’s why he died, but in light of all that has happened since, nobody on either side has any credibility, there is no sieve big enough to separate the proliferating lies from any semblance of truth, and whatever the verdict, we will never know for sure.[6]

But what I’m particularly interested in at this point is how Fox News has taken up Karen Read. On the one hand, Fox is Murdoch is all about the clickbait, especially the crime clickbait, so of course they have. On the other hand, Fox is Murdoch is rightwing is pro-cop, so a story about the misdeeds of every variety of cop (and not only that but a whole bunch of white male cops, so they can’t yell DEI) seems a tad off brand.

My daughter says Republicans know and accept that there are bad cops because it lets them blame all the problems on bad individuals not bad systems, which is certainly a piece of what’s happening here. But I think we also need to consider the fact that Fox is trying their hardest not to let Trump’s base see what he and his administration are really up to. As I wrote this yesterday, the website’s only above-the-fold Trump stories were Alcatraz and Obama’s library. The other top stories were Canada, Jill Biden, Joe Biden, the conclave, Nike and trans youth athletes…and Karen Read. No ICE, no DOGE, no budget cuts, no vaccines, no Supreme Court, not even Harvard, neither above the fold nor below.

So Trump and his allies are yet again sacrificing his base (cops!) to preserve their delusions,[7] and Karen Read has become a Fox false front. What a life that woman lives.

Because we still deserve nice things…

Jonathan Richman. Especially The Modern Lovers and I, Jonathan.

Look, I am fundamentally a 70s white girl from Boston, so of course Jonathan Richman. But I swear Jonathan spent his whole set gazing soulfully into my eyes one night in the 90s at Freight and Salvage, which even as it was happening I thought was my wishful delusion (which is different from hallucination), but after the show, my stepbrother, who was in the back (I was in the front), said something along the lines of wow, Jonathan sure was staring soulfully into your eyes all night, so it must have been true, which means I can legitimately tell you that Jonathan strumming his guitar and staring into your eyes is very nice, as are Jonathan’s songs, as is Roadrunner, Joshua Clover’s book about “Roadrunner” (yes, an entire book about one song, the first in a series Joshua edited), which I finally finished this week, if by nice in this case we mean brilliant and true, as sometimes we do.

[1] If you feel like cosplaying an English grad student, you can read the article, “The Rise of Fictionality,” that eventually emerged from this line of thinking.

[2] Wikipedia traces the usage history of the term hallucination in AI, including critiques similar to mine. Its emergence can certainly be explained as scientists grabbing a useful descriptor without considering its broader implications because they are scientists, not linguists or English professors. But, being of the English professor persuasion, I find it pretty easy to see it as a surfacing of the unconscious, staking of ground, or (and?) denial of complicity. Just saying…

At this point, you have three options:

You’ve never heard of Karen Read, in which case you face the choice of whether to google her or go on with your life in happy ignorance.

You know that Karen Read was accused of killing her boyfriend, something happened with the first trial, and now she is being tried again, in which case you know enough to keep reading. (Also, if you qualified for Option 1, you now qualify for Option 2.)

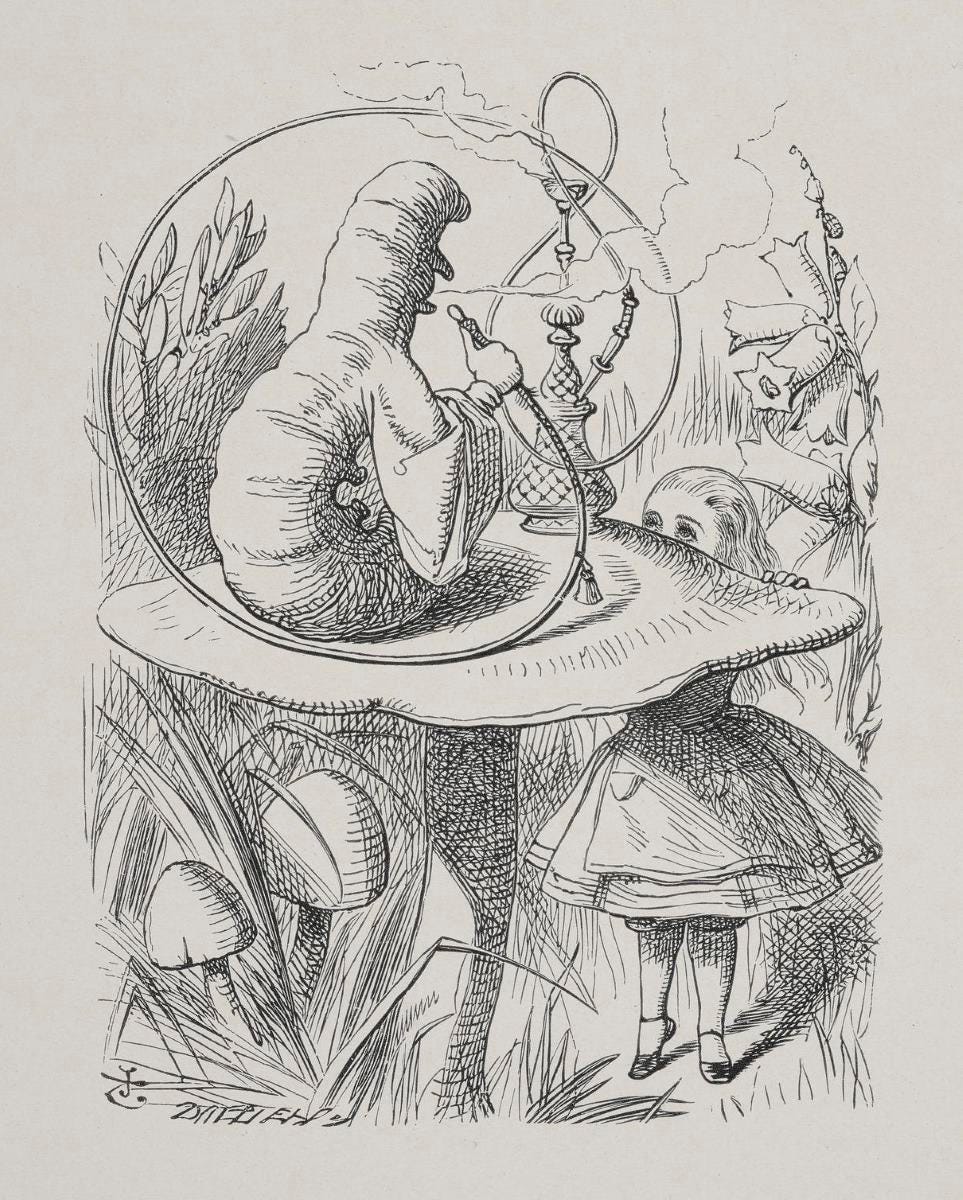

You have already fallen down this rabbit hole, in which case you should keep on doing you (I say this with full empathy, regardless of whether you are Team Karen, Team Brian Albert’s Dog, or, god forbid, Team Turtleboy).

[4] Again, google at your peril.

[5] If you want more terrible Massachusetts cops just next door to these terrible Massachusetts cops, google “Sandra Birchmere Stoughton.”

[6] Just for the heck of it, I asked ChatGPT if it thought she was guilty. It gave me a report on the trial and said the case was currently unresolved. I asked again if it thought she was guilty. It said there were strong arguments on both sides but none of them were “indisputably conclusive.” I asked if it would have found her guilty in the first trial. It said it would have voted not guilty because the prosecution didn’t prove guilt beyond a reasonable doubt (which is the conclusion the jurors came to on two of the three counts). At that point, I got bored.

[7] Yes, that’s a purposefully ambiguous pronoun.

someone in my ceramics class was telling me all about karen read the other day but she failed to mention that it was in MA so I failed to care

Well .. you have lovely eyes. Also, can we talk about how confident and gaslighting AI is? I think this is what scares me most. AI is sociopathic on a lot of levels —if we’re going to humanize AI, which is the point .. to create a smooth as silk sociopath.